Nvidia’s RTX 40 series will be launching soon. The cards in the new series are going to be significantly more power-hungry than the RTX 20 or RTX 30 series. Among all GPUs, including AMD’s cards and the new Intel dGPUs, the power requirements of the new RTX 40 series stand out as the most extreme.

In this article, we are going to talk about the increasing power requirements of the new GPUs and why that is simply bad. If you want the rumored specs of the new Ada Lovelace stack, here’s a handy list by known leaker kopite7kimi:

- RTX 4090, AD102-300, 16384FP32, 384bit 21Gbps 24G GDDR6X, 450W

- RTX 4080, AD103-300, 10240FP32, 256bit (?18Gbps 16G GDDR6?), ?420W

- RTX 4070, AD104-275, 7168FP32, 160bit 18Gbps GDDR6 10G, 300W

Question marks mean uncertainty. Note that if the 450W is the reference, then board partners will likely make GPUs consuming upward of that, so anywhere from 500W to 600W is fair game for the RTX 4090 partner video cards. The RTX 4090 Ti, therefore, might be even higher at 800W or more.

Rumors should always be taken with a grain of salt. A good bit of what reputed leakers say does fall into place, but not all of it does. For example, as per the above list, the RTX 4090 would be significantly more power-efficient than the 4070, which just makes no sense. Between the 4080 and the 4090, too, the difference in specs alone is too high for only a 30W increase (60% more CUDA cores but only 7% more power). Most likely, the 4090 will be upward of 500W at least or, less likely, the other two will have lower power requirements.
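To make the mismatch concrete, here is a minimal sketch that uses only the rumored numbers from the list above. Cores per watt is a crude proxy chosen for illustration, not a real efficiency benchmark, and the function names are hypothetical.

```python
# Rumored figures from the list above: (FP32 cores, board power in watts).
rumored = {
    "RTX 4090": (16384, 450),
    "RTX 4080": (10240, 420),
    "RTX 4070": (7168, 300),
}


def cores_per_watt(cores: int, watts: int) -> float:
    """Crude efficiency proxy: FP32 cores per watt of rated board power."""
    return cores / watts


def percent_more(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100


efficiency = {name: cores_per_watt(*spec) for name, spec in rumored.items()}
for name, value in efficiency.items():
    print(f"{name}: {value:.1f} cores per watt")

core_gap = percent_more(rumored["RTX 4090"][0], rumored["RTX 4080"][0])
power_gap = percent_more(rumored["RTX 4090"][1], rumored["RTX 4080"][1])
print(f"4090 vs 4080: {core_gap:.0f}% more cores, {power_gap:.0f}% more power")
```

By this rough measure the rumored 4090 packs about 36 cores per watt against roughly 24 for the other two cards, which is the gap that makes the leaked wattages look suspicious.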

Another tweet from the same account also talks about a 900W TGP GPU but it could just be a test card from Nvidia – though it does hint at Nvidia’s capabilities and possibly what the future has in store for us.

Transient power spikes

Higher power requirements are bad. Let’s just get that established first. But why? Let’s say you have access to cheap or free electricity, surely, it’s all good in your case then, right? Wrong.

The wattages quoted above are what we call the “average” power requirements. During high-end gaming, you can easily hit transient power spikes. These are milliseconds-long periods where the GPU demands an insanely large amount of power to draw a frame. Maybe a large texture needed loading, maybe there were too many physics calculations to be done in the frame, and so on.

GamersNexus did an amazing video explaining what they called the “brewing problem with GPU power design”: transients. The higher-end cards are already causing trouble for people’s computers and forcing them to upgrade to the kind of PSUs used for multi-GPU rigs.

Transient power spikes have been a part of gaming for a while now. In lower- and mid-range GPUs, these spikes are also lower, as the spike is proportional to the average power draw of the card. These lower spikes can easily be handled by the power supply. For example, a GTX 1660 Ti or an AMD RX 580 will both work just fine with a 500-600W PSU even during power spikes.

Their average consumption is so low that even a spike of 100% more power is something your PSU can manage. But things quickly become very bad when we discuss the same for more power-hungry GPUs. PSU shutdowns during gaming are not a rare phenomenon with the RTX 3090 and 3090 Ti. With the 40 series increasing the power requirements further, transient power spikes only become a much bigger threat.

You will likely need a 1.5 kW (that is, 1,500W) PSU to run the RTX 4090 without the fear of abrupt shutdowns due to transient power spikes. Note that a PSU can briefly handle more power than its rated wattage, but only up to a point.
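Here is a rough sketch of the arithmetic behind PSU sizing for spikes. The 2x spike factor comes from the “100% more power” example above; the 250W figure for the rest of the system (CPU, drives, fans) is purely an assumption for illustration, and `psu_for_spikes` is a hypothetical helper, not a vendor formula.

```python
def psu_for_spikes(gpu_avg_w: float, spike_factor: float = 2.0,
                   rest_of_system_w: float = 250.0) -> float:
    """Peak load in watts if the GPU spikes to spike_factor times its average draw.

    spike_factor=2.0 models a spike of 100% more power.
    rest_of_system_w is an assumed allowance for everything except the GPU.
    """
    return gpu_avg_w * spike_factor + rest_of_system_w


cards = [
    ("GTX 1660 Ti (120W)", 120),
    ("Rumored RTX 4090 reference (450W)", 450),
    ("Hypothetical 600W partner card", 600),
]
for label, watts in cards:
    print(f"{label}: plan for about {psu_for_spikes(watts):.0f}W")
```

Under these assumptions the 120W card peaks at about 490W, comfortably inside a 500-600W PSU, while a 600W partner card lands at about 1,450W, which is where the 1.5 kW figure starts to look plausible.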

The true cost of rising power requirements

When the world is already halfway through an energy crisis – it’s a no-brainer why the demand for more electricity is considered bad. This is the reason why Ethereum is moving to a non-mining consensus and why Bitcoin received so much flak for consuming so much electricity just to keep its network safe.

If we also encourage higher wattage requirements to render more in-game frames, how are we better than those miners? If we selfishly buy 500W GPUs for our new systems while some people don’t have access to basic electricity, how are we better than those GPU scalpers?

Down this road, we as gamers run the risk of becoming what we hate, without even acknowledging it. But that’s not the full picture. More power-hungry video cards are larger (as they have more processing cores) and have other needs as well. This also increases the overall cost of gaming for you.

All that being said, it’s very rare that gamers pay attention to their power usage while gaming. It’s just not something most of us factor in. It’s also hard to calculate. A lot of gamers will still go for the fastest and newest card on the market throwing all power concerns to the wind. Energy costs truly need more coverage in general.
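The calculation itself is not that hard once you pick some numbers. Below is a minimal sketch; the hours per day and the price per kWh are assumptions for illustration only, and it counts the GPU alone, ignoring PSU losses and the rest of the system.

```python
def annual_energy_cost(avg_watts: float, hours_per_day: float,
                       price_per_kwh: float) -> float:
    """Yearly electricity cost of a component drawing avg_watts while in use."""
    kwh_per_year = avg_watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Assumed usage: 3 hours of gaming per day at 0.15 per kWh (both hypothetical).
HOURS_PER_DAY = 3
PRICE_PER_KWH = 0.15

for watts in (220, 450, 600):
    cost = annual_energy_cost(watts, HOURS_PER_DAY, PRICE_PER_KWH)
    print(f"{watts}W GPU: about {cost:.2f} per year")
```

Swap in your own tariff and play time; the point is only that the cost scales linearly with the card’s average draw.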

Nobody wants to have a 4K gaming rig that only works with a three-phase circuit and an AC unit just to cool it down.

Apart from risking becoming as power-inefficient as Bitcoin miners or as selfish as GPU scalpers, gamers buying GPUs with higher power requirements doesn’t just mean they pay more for electricity, but it also means that they need to:

- Upgrade the case (as these are larger cards and will cause sagging),

- Invest more in cooling (more power equals more heat), and

- Get a beefy PSU (transient power spikes for a high-end 40 series GPU can only be handled by a PSU upward of 1,000W).

Is true innovation dead?

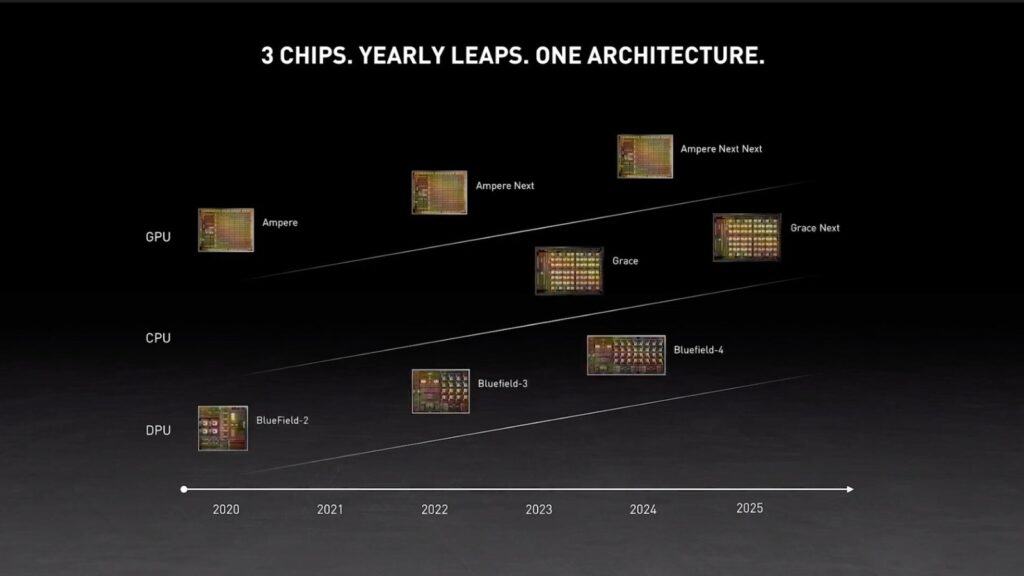

A new product generation introduces new features. Higher counts don’t really qualify as a newer generation in most cases. Higher clock speeds, higher CUDA core counts, more GDDR6 video memory, etc. are all good, but power consumption is increasing along with them.

So, when Nvidia has to up their power limits for the performance improvements, you really wonder whether it’s a new generation of cards or not. In many ways, the RTX 40 series is just going to be a continuation of the current RTX 30 series with more power shoehorned in. This sideways evolution that focuses on making things bigger to give more performance is not really what tech evolution usually looks like.

Delivering the same performance or more within the same price and power usage is a better way to measure generations in my opinion.

At this point, you wonder if true innovation is dead.

Instead of better component-level or software-level technological improvements, we’re getting new cards that have increased performance simply because they draw more power and pack more cores. How is that a new generation of cards, and not a more high-end SKU line from the previous stack?

Is it time for dedicated PC circuits?

A circuit box (breaker panel) distributes electricity throughout a system, such as your home. Each circuit has a breaker, which trips when the circuit draws more current than it is rated for. You experience this when you run a heavy appliance, or more than one heavy appliance, from the same socket or power strip.

Typically, homes don’t have dedicated circuits for PCs as they do for washing machines and ACs. If things keep going down the same track, homes might soon need PC-only circuits that deliver power to nothing but your PC.

This will make sure any outage is a lot less likely and more power headroom is always present in case your GPU wishes to draw more power because the reflections are pretty hard to render in a given frame.

A couple of important notes before we conclude the section:

- PC-specific circuits were common in the past, especially in business buildings. That was a different case, of course, as buildings back then weren’t ready for the increasing power usage of computers, and the electrical wiring was all set up never expecting the then-insane power draw of a then-legendary Pentium dual-core.

- This is largely a US-specific problem. Most countries don’t split their 240V (or 220V) main input into two as it happens in US households – essentially making the voltage 120V (half of 240V). Drawing more power from the same circuit without overloading it is easier in most of the remaining world including Europe and Asia. It still means you consume the electricity, just that there’s a lower risk of tripping the breaker from a short-lived unexpected power demand from the PSU.
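The voltage point above comes down to power = voltage x current. The sketch below compares how much sustained load a single circuit can carry; the breaker ratings (15A at 120V, 16A at 230V), the 80% rule of thumb for continuous loads, and the 90% PSU efficiency are assumptions for illustration, so check your own panel and local electrical code.

```python
def circuit_capacity_w(volts: float, breaker_amps: float,
                       continuous_derate: float = 0.8) -> float:
    """Sustained power a circuit can carry, using an assumed 80% derating."""
    return volts * breaker_amps * continuous_derate


def wall_draw_w(dc_load_w: float, psu_efficiency: float = 0.9) -> float:
    """Power pulled from the wall for a given PC load at an assumed efficiency."""
    return dc_load_w / psu_efficiency


pc_wall_w = wall_draw_w(1000)  # hypothetical PC pulling 1,000W from its PSU

for label, volts, amps in [("120V / 15A circuit", 120, 15),
                           ("230V / 16A circuit", 230, 16)]:
    capacity = circuit_capacity_w(volts, amps)
    headroom = capacity - pc_wall_w
    print(f"{label}: {capacity:.0f}W sustained, {headroom:.0f}W left for everything else")
```

With these assumed ratings, the 120V circuit has only a few hundred watts to spare once such a PC is running, while the 230V circuit has well over a kilowatt left.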

Dedicated PC circuits in the average home might become the new normal but it’s impossible to look away from the fact that it’s also a precursor to something bad. We haven’t even started on the means to improve a GPU’s power efficiency. Why should the consumer be worrying about circuits just to maintain consistent gaming performance?

What demand are we expecting for these new GPUs?

Nvidia’s 40 series GPUs might be very power-hungry. But does that mean the demand for these GPUs will suffer? Here are a few facts that we know:

A world in conflict

- Inflation rates are climbing

- Countries are failing to meet their growth forecasts

- Gas and oil markets have been thrown into turmoil by the global conflict

All this means that people are paying more for food and other basic amenities, including much more for fuel. During economic slowdowns like this, consumer products such as graphics cards, TVs, or home theaters see lower sales as people’s expenses increase and they become more savings-minded.

Price normalization

The mining boom has come to a screeching halt with Ethereum’s mining power diluting into oblivion as more and more miners see:

- The quickly-dropping charts

- Their precious cryptocurrency holdings all in red (alongside other savings such as stocks)

- A dark future ahead because Ethereum mining is ending in a few months

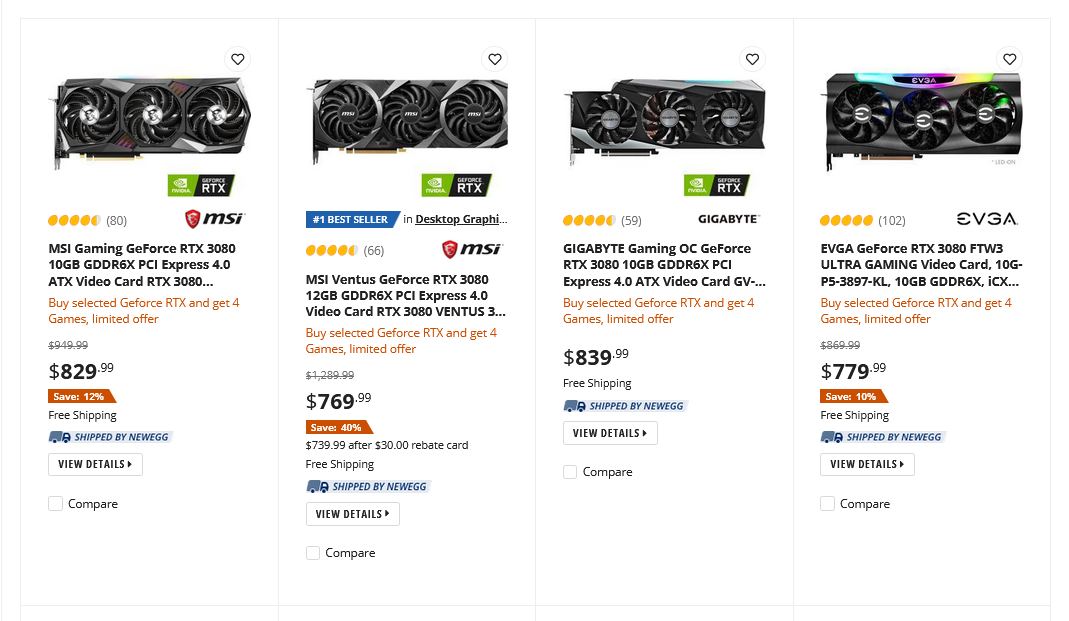

This has contributed to an influx of GPUs into the market, with the RTX 3080 12GB commonly selling for under $800. This is great news. Prices are coming back to normal (inflation-adjusted) levels. In some cases, new AMD cards were even selling under their MSRP on Newegg and Amazon.

The demand for the 40 series

Let’s circle back to the original question. What is the demand for the RTX 40 series going to look like? Paying more for electricity and PC upgrades is one thing, but the cards themselves will see a higher than usual MSRP if rumors are to be believed. Nvidia is most likely not going to sell the RTX 4090, for example, at the same price as the RTX 3090 ($1500, which is already too high compared to the much more cost-effective RTX 3080).

That’s a lot of money in these trying times.

Add to that the used GPUs coming into the market. Do you think the demand will be high?

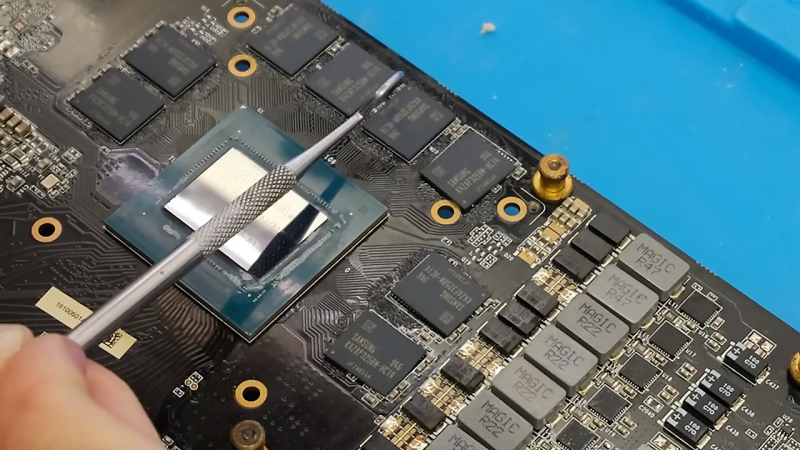

A tip about used mining GPUs: Regardless of what you have believed so far, know that extensive mining on an overclocked card does not introduce any problems to it. At most you might need to get it repasted if it’s been mining for several years nonstop. The silicon lottery, your in-game settings, game optimization, etc. still determine the likelihood of running into problems with a certain GPU or its performance vis-à-vis another GPU of the same brand and line.

Nvidia will still sell out its initial stock of the RTX 40 series when it launches. There are simply too many enthusiasts, rich gamers, and gamers with no video cards out there. But the overall demand can be weaker compared to the previous series due to the economic slowdown and the price normalization of other GPUs. Intel’s foray into the hotly contested video graphics market might also help, especially for lower-end rigs.

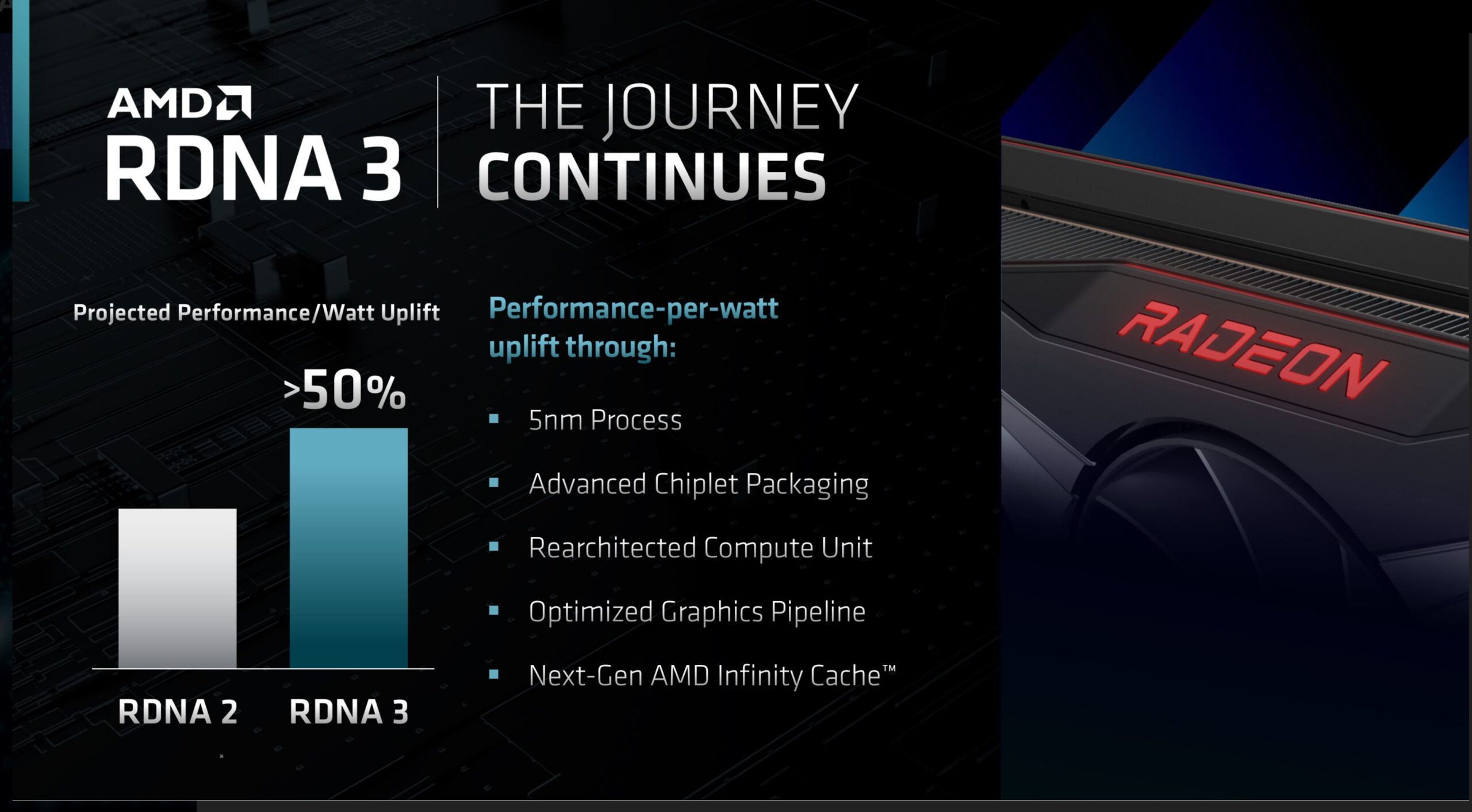

What about AMD?

Honestly, to me so far, AMD’s new line of GPUs looks more promising in terms of power efficiency. Even if I won’t be getting the best ray-traced frames, I will be happy paying $500 less for a card that draws pretty much 50-60% of the 40 series’ power.

Perhaps Nvidia needs a kick like this, where gamers actively call them out for making needlessly power-hungry GPUs without the need or demand just to maintain their “top spot” in an industry of two (well, now three, but come on), and switch to AMD.

Who knows, this might take things back to the time of the Nvidia 16 or 20 series by having them put more effort into making their cards more power-efficient. This will only happen, of course, if gamers switch to AMD.

A big corporation such as Nvidia only cares about whether the sales are happening, not about who is buying or under what circumstances. Nothing remarkable will happen if gamers only show disdain and the sales keep coming in. Switching to more power-efficient GPUs from AMD or even Intel can be the much-needed reminder for Nvidia to rethink whether it is more important to keep their top spot in the speed/FPS charts or to keep their business running.

What is the way out?

Is it really time to consider the 15W Steam Deck? Hell, no. Older GPUs? Sure, Elden Ring won’t look as good, but older titles will work just fine. Or cloud gaming? Definitely a competitor, but not owning your hardware might be a little uncomfortable for a full-time gamer.

If AMD and Intel come out with reliable and decent cards with power efficiency that beats Nvidia by a high margin, we won’t need to answer this question. They will mop the floor with Nvidia.

But the problem is bigger than that. There’s nothing stopping the whole industry from going for more power requirements and board partners strapping more cool stuff and higher overclocks to increase it further, even in AMD cards or American variants of Intel cards (so far, only the Asian market specific board partner GUNNIR has launched the dedicated Alchemist GPUs).

So, what is the way out?

Given enough engineers, no problem is too big to solve in the world of graphics cards and gaming. We have seen this time and again. All the teams don’t need to get together and set up a 100-person Zoom meet – that will just be utter chaos as we all know. They just need to invest more in power research and development.

GPU power usage is becoming a real problem and affecting people’s lives. Maybe it’s time to have a dedicated branch for power R&D now in teams Green, Red, and Blue.

Once Nvidia and AMD understand this, I’m sure we will be seeing improvements in the power efficiency of the new cards. For now, though, all I will recommend is staying as far away from 500-600W GPUs as possible unless you want to upgrade your case, your PSU, your cooling, and oh, your side income to pay for all that extra electricity, to render 50 more frames per second.

Corporate decisions tend to be made around greed more often than not. What’s happening with Nvidia’s upcoming RTX 40 series, more specifically its average power requirements, is clearly a testament to that.